Yann Neuhaus

CloudNativePG – Scaling up and down

By now, if you followed the previous posts (here, here, here, here and here), we know quite a bit about how to use CloudNativePG to deploy a PostgreSQL cluster and how to get detailed information about the deployment. What we’ll look at in this post is how you can leverage this deployment to scale the cluster up and down. This might be important if you have changing workloads throughout the day or the week and your application is able to distribute read only workloads across the PostgreSQL replicas.

When we look at what we have now, we do see this:

minicube@micro-minicube:~> kubectl-cnpg status my-pg-cluster

Cluster Summary

Name: my-pg-cluster

Namespace: default

System ID: 7378131726640287762

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:16.2

Primary instance: my-pg-cluster-1

Primary start time: 2024-06-08 13:59:26 +0000 UTC (uptime 88h35m7s)

Status: Cluster in healthy state

Instances: 3

Ready instances: 3

Current Write LSN: 0/26000000 (Timeline: 1 - WAL File: 000000010000000000000012)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

my-pg-cluster-ca 2024-09-06 13:54:17 +0000 UTC 86.31

my-pg-cluster-replication 2024-09-06 13:54:17 +0000 UTC 86.31

my-pg-cluster-server 2024-09-06 13:54:17 +0000 UTC 86.31

Continuous Backup status

Not configured

Physical backups

No running physical backups found

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

my-pg-cluster-2 0/26000000 0/26000000 0/26000000 0/26000000 00:00:00 00:00:00 00:00:00 streaming async 0 active

my-pg-cluster-3 0/26000000 0/26000000 0/26000000 0/26000000 00:00:00 00:00:00 00:00:00 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Managed roles status

No roles managed

Tablespaces status

No managed tablespaces

Pod Disruption Budgets status

Name Role Expected Pods Current Healthy Minimum Desired Healthy Disruptions Allowed

---- ---- ------------- --------------- ----------------------- -------------------

my-pg-cluster replica 2 2 1 1

my-pg-cluster-primary primary 1 1 1 0

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

my-pg-cluster-1 37 MB 0/26000000 Primary OK BestEffort 1.23.1 minikube

my-pg-cluster-2 37 MB 0/26000000 Standby (async) OK BestEffort 1.23.1 minikube

my-pg-cluster-3 37 MB 0/26000000 Standby (async) OK BestEffort 1.23.1 minikube

We have a primary instance running in pod my-pg-cluster-1, and we have two replicas in asynchronous mode running in pods my-pg-cluster-2 and my-pg-cluster-3. Let’s assume we have an increasing workload and we want to have two more replicas. There are two ways in which you can do this. The first one is to change the configuration of the cluster in the yaml and then re-apply the configuration. This is the configuration as it is now:

apiVersion: postgresql.cnpg.io/v1

kind: Cluster

metadata:

name: my-pg-cluster

spec:

instances: 3

bootstrap:

initdb:

database: db1

owner: db1

dataChecksums: true

walSegmentSize: 32

localeCollate: 'en_US.utf8'

localeCType: 'en_US.utf8'

postInitSQL:

- create user db2

- create database db2 with owner = db2

postgresql:

parameters:

work_mem: "12MB"

pg_stat_statements.max: "2500"

pg_hba:

- host all all 192.168.122.0/24 scram-sha-256

storage:

size: 1Gi

All we need to do is to change the number of instances we want to have. With the current value of three, we get one primary and two replicas. If we want to have two more replicas, change this to five and re-apply:

minicube@micro-minicube:~> grep instances pg.yaml

instances: 5

minicube@micro-minicube:~> kubectl apply -f pg.yaml

cluster.postgresql.cnpg.io/my-pg-cluster configured

By monitoring the pods you can follow the progress of bringing up two new pods and attaching the replicas to the current cluster:

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (32m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (32m ago) 2d

my-pg-cluster-3 1/1 Running 1 (32m ago) 2d

my-pg-cluster-4 0/1 PodInitializing 0 3s

my-pg-cluster-4-join-kqgwp 0/1 Completed 0 11s

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (33m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (33m ago) 2d

my-pg-cluster-3 1/1 Running 1 (33m ago) 2d

my-pg-cluster-4 1/1 Running 0 42s

my-pg-cluster-5 1/1 Running 0 19s

Now we see five pods, as requested, and looking at the PostgreSQL streaming replication configuration confirms that we now have four replicas:

minicube@micro-minicube:~> kubectl-cnpg status my-pg-cluster

Cluster Summary

Name: my-pg-cluster

Namespace: default

System ID: 7378131726640287762

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:16.2

Primary instance: my-pg-cluster-1

Primary start time: 2024-06-08 13:59:26 +0000 UTC (uptime 88h43m54s)

Status: Cluster in healthy state

Instances: 5

Ready instances: 5

Current Write LSN: 0/2C000060 (Timeline: 1 - WAL File: 000000010000000000000016)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

my-pg-cluster-ca 2024-09-06 13:54:17 +0000 UTC 86.30

my-pg-cluster-replication 2024-09-06 13:54:17 +0000 UTC 86.30

my-pg-cluster-server 2024-09-06 13:54:17 +0000 UTC 86.30

Continuous Backup status

Not configured

Physical backups

No running physical backups found

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

my-pg-cluster-2 0/2C000060 0/2C000060 0/2C000060 0/2C000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

my-pg-cluster-3 0/2C000060 0/2C000060 0/2C000060 0/2C000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

my-pg-cluster-4 0/2C000060 0/2C000060 0/2C000060 0/2C000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

my-pg-cluster-5 0/2C000060 0/2C000060 0/2C000060 0/2C000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Managed roles status

No roles managed

Tablespaces status

No managed tablespaces

Pod Disruption Budgets status

Name Role Expected Pods Current Healthy Minimum Desired Healthy Disruptions Allowed

---- ---- ------------- --------------- ----------------------- -------------------

my-pg-cluster replica 4 4 3 1

my-pg-cluster-primary primary 1 1 1 0

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

my-pg-cluster-1 37 MB 0/2C000060 Primary OK BestEffort 1.23.1 minikube

my-pg-cluster-2 37 MB 0/2C000060 Standby (async) OK BestEffort 1.23.1 minikube

my-pg-cluster-3 37 MB 0/2C000060 Standby (async) OK BestEffort 1.23.1 minikube

my-pg-cluster-4 37 MB 0/2C000060 Standby (async) OK BestEffort 1.23.1 minikube

my-pg-cluster-5 37 MB 0/2C000060 Standby (async) OK BestEffort 1.23.1 minikube

If you want to scale this down again (maybe because the workload decreased), you can do that in the same way by reducing the number of instances from five to three in the cluster definition, or by directly scaling the cluster down with kubectl:

minicube@micro-minicube:~> kubectl scale --replicas=2 -f pg.yaml

cluster.postgresql.cnpg.io/my-pg-cluster scaled

Attention: Replicas in this context does not mean streaming replication replicas. It means replicas in the context of Kubernetes, so if you do it like above, the result will be one primary and one replica:

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (39m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (39m ago) 2d1h

What you probably really want is this (to get back to the initial state of the cluster):

minicube@micro-minicube:~> kubectl scale --replicas=3 -f pg.yaml

cluster.postgresql.cnpg.io/my-pg-cluster scaled

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (41m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (41m ago) 2d1h

my-pg-cluster-6-join-747nx 0/1 Pending 0 1s

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (41m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (41m ago) 2d1h

my-pg-cluster-6-join-747nx 1/1 Running 0 5s

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (42m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (42m ago) 2d1h

my-pg-cluster-6 0/1 Running 0 5s

my-pg-cluster-6-join-747nx 0/1 Completed 0 14s

...

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 1 (42m ago) 2d1h

my-pg-cluster-2 1/1 Running 1 (42m ago) 2d1h

my-pg-cluster-6 1/1 Running 0 16s

What you shouldn’t do is to mix both ways of scaling, for one reason: If you scale up or down by using “kubectl scale”, this will not modify your cluster configuration file. There we still have five instances:

minicube@micro-minicube:~> grep instances pg.yaml

instances: 5

Our recommendation is, to do this only by modifying the configuration and re-apply afterwards. This ensures, that you always have the “reality” in the configuration file, and not a mix of live state and desired state.

In the next we’ll look into storage, because you want your databases to be persistent and fast.

L’article CloudNativePG – Scaling up and down est apparu en premier sur dbi Blog.

CloudNativePG – The kubectl plugin

As we’re getting more and more familiar with CloudNativePG, now it’s time to get more information about our cluster, either for monitoring or troubleshooting purposes. Getting information about the general state of our cluster can be easily done by using kubectl.

For listing the global state of our cluster, you can do:

minicube@micro-minicube:~> kubectl get cluster -A

NAMESPACE NAME AGE INSTANCES READY STATUS PRIMARY

default my-pg-cluster 41h 3 3 Cluster in healthy state my-pg-cluster-1

As we’ve seen in the previous posts (here, here, here and here) kubectl can also be used to get information about the pods and services of the deployment:

minicube@micro-minicube:~> kubectl get pods

NAME READY STATUS RESTARTS AGE

my-pg-cluster-1 1/1 Running 0 108m

my-pg-cluster-2 1/1 Running 0 103m

my-pg-cluster-3 1/1 Running 0 103m

minicube@micro-minicube:~> kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4d

my-pg-cluster-r ClusterIP 10.111.113.4 <none> 5432/TCP 41h

my-pg-cluster-ro ClusterIP 10.110.137.246 <none> 5432/TCP 41h

my-pg-cluster-rw ClusterIP 10.100.77.15 <none> 5432/TCP 41h

What we cannot see easily with kubectl is information related to PostgreSQL itself. But as kubectl can be extended with plugins, CloudNativePG comes with a plugin for kubectl which is called “cnpg“. There are several installation methods available, we’ll go for the scripted version:

minicube@micro-minicube:~> curl -sSfL https://github.com/cloudnative-pg/cloudnative-pg/raw/main/hack/install-cnpg-plugin.sh | sudo sh -s -- -b /usr/local/bin

cloudnative-pg/cloudnative-pg info checking GitHub for latest tag

cloudnative-pg/cloudnative-pg info found version: 1.23.1 for v1.23.1/linux/x86_64

cloudnative-pg/cloudnative-pg info installed /usr/local/bin/kubectl-cnpg

A very nice feature of this plugin is, that it comes with support for auto completion of the available commands, but this needs to be configured before you can use it. You can use the plugin itself to generate the completion script for one of the supported shells (bash in my case):

minicube@micro-minicube:~> kubectl cnpg completion

Generate the autocompletion script for kubectl-cnpg for the specified shell.

See each sub-command's help for details on how to use the generated script.

Usage:

kubectl cnpg completion [command]

Available Commands:

bash Generate the autocompletion script for bash

fish Generate the autocompletion script for fish

powershell Generate the autocompletion script for powershell

zsh Generate the autocompletion script for zsh

...

minicube@micro-minicube:~> kubectl cnpg completion bash > kubectl_complete-cnpg

minicube@micro-minicube:~> chmod +x kubectl_complete-cnpg

minicube@micro-minicube:~> sudo mv kubectl_complete-cnpg /usr/local/bin/

From now, tab completion is working:

minicube@micro-minicube:~> kubectl-cnpg [TAB][TAB]

backup (Request an on-demand backup for a PostgreSQL Cluster)

certificate (Create a client certificate to connect to PostgreSQL using TLS and Certificate authentication)

completion (Generate the autocompletion script for the specified shell)

destroy (Destroy the instance named [cluster]-[node] or [node] with the associated PVC)

fencing (Fencing related commands)

fio (Creates a fio deployment, pvc and configmap)

help (Help about any command)

hibernate (Hibernation related commands)

install (CNPG installation commands)

logs (Collect cluster logs)

maintenance (Sets or removes maintenance mode from clusters)

pgadmin4 (Creates a pgadmin deployment)

pgbench (Creates a pgbench job)

promote (Promote the pod named [cluster]-[node] or [node] to primary)

psql (Start a psql session targeting a CloudNativePG cluster)

publication (Logical publication management commands)

reload (Reload the cluster)

report (Report on the operator)

restart (Restart a cluster or a single instance in a cluster)

snapshot (command removed)

status (Get the status of a PostgreSQL cluster)

subscription (Logical subscription management commands)

version (Prints version, commit sha and date of the build)

As you can see, quite a few commands are available, but for the scope of this post, we’ll only use the commands for getting logs and detailed information about our cluster. Obviously the “status” command should give us some global information about the cluster, and actually it will give us much more:

minicube@micro-minicube:~> kubectl-cnpg status my-pg-cluster

Cluster Summary

Name: my-pg-cluster

Namespace: default

System ID: 7378131726640287762

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:16.2

Primary instance: my-pg-cluster-1

Primary start time: 2024-06-08 13:59:26 +0000 UTC (uptime 42h49m23s)

Status: Cluster in healthy state

Instances: 3

Ready instances: 3

Current Write LSN: 0/1E000000 (Timeline: 1 - WAL File: 00000001000000000000000E)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

my-pg-cluster-ca 2024-09-06 13:54:17 +0000 UTC 88.21

my-pg-cluster-replication 2024-09-06 13:54:17 +0000 UTC 88.21

my-pg-cluster-server 2024-09-06 13:54:17 +0000 UTC 88.21

Continuous Backup status

Not configured

Physical backups

No running physical backups found

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

my-pg-cluster-2 0/1E000000 0/1E000000 0/1E000000 0/1E000000 00:00:00 00:00:00 00:00:00 streaming async 0 active

my-pg-cluster-3 0/1E000000 0/1E000000 0/1E000000 0/1E000000 00:00:00 00:00:00 00:00:00 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Managed roles status

No roles managed

Tablespaces status

No managed tablespaces

Pod Disruption Budgets status

Name Role Expected Pods Current Healthy Minimum Desired Healthy Disruptions Allowed

---- ---- ------------- --------------- ----------------------- -------------------

my-pg-cluster replica 2 2 1 1

my-pg-cluster-primary primary 1 1 1 0

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

my-pg-cluster-1 37 MB 0/1E000000 Primary OK BestEffort 1.23.1 minikube

my-pg-cluster-2 37 MB 0/1E000000 Standby (async) OK BestEffort 1.23.1 minikube

my-pg-cluster-3 37 MB 0/1E000000 Standby (async) OK BestEffort 1.23.1 minikube

This is quite some amount of information and tells us a lot about our cluster, including:

- We have one primary node and two replicas in asynchronous replication (this comes from the three instances we specified in the cluster configuration)

- All instances are healthy and there is no replication lag

- The version of PostgreSQL is 16.2

- The configuration is using replication slots

- Information about the certificates used for encrypted traffic

- We do not have configured any backups (this will be the topic of one of the next posts)

If you want too see even more information, including e.g. the configuration of PostgreSQL, pass the “–verbose” flag to the status command:

minicube@micro-minicube:~> kubectl-cnpg status my-pg-cluster --verbose

Cluster Summary

Name: my-pg-cluster

Namespace: default

System ID: 7378131726640287762

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:16.2

Primary instance: my-pg-cluster-1

Primary start time: 2024-06-08 13:59:26 +0000 UTC (uptime 42h57m30s)

Status: Cluster in healthy state

Instances: 3

Ready instances: 3

Current Write LSN: 0/20000110 (Timeline: 1 - WAL File: 000000010000000000000010)

PostgreSQL Configuration

archive_command = '/controller/manager wal-archive --log-destination /controller/log/postgres.json %p'

archive_mode = 'on'

archive_timeout = '5min'

cluster_name = 'my-pg-cluster'

dynamic_shared_memory_type = 'posix'

full_page_writes = 'on'

hot_standby = 'true'

listen_addresses = '*'

log_destination = 'csvlog'

log_directory = '/controller/log'

log_filename = 'postgres'

log_rotation_age = '0'

log_rotation_size = '0'

log_truncate_on_rotation = 'false'

logging_collector = 'on'

max_parallel_workers = '32'

max_replication_slots = '32'

max_worker_processes = '32'

pg_stat_statements.max = '2500'

port = '5432'

restart_after_crash = 'false'

shared_memory_type = 'mmap'

shared_preload_libraries = 'pg_stat_statements'

ssl = 'on'

ssl_ca_file = '/controller/certificates/client-ca.crt'

ssl_cert_file = '/controller/certificates/server.crt'

ssl_key_file = '/controller/certificates/server.key'

ssl_max_protocol_version = 'TLSv1.3'

ssl_min_protocol_version = 'TLSv1.3'

unix_socket_directories = '/controller/run'

wal_keep_size = '512MB'

wal_level = 'logical'

wal_log_hints = 'on'

wal_receiver_timeout = '5s'

wal_sender_timeout = '5s'

work_mem = '12MB'

cnpg.config_sha256 = 'db8a255b574978eb43a479ec688a1e8e72281ec3fa03b59bcb3cf3bf9b997e67'

PostgreSQL HBA Rules

#

# FIXED RULES

#

# Grant local access ('local' user map)

local all all peer map=local

# Require client certificate authentication for the streaming_replica user

hostssl postgres streaming_replica all cert

hostssl replication streaming_replica all cert

hostssl all cnpg_pooler_pgbouncer all cert

#

# USER-DEFINED RULES

#

host all all 192.168.122.0/24 scram-sha-256

#

# DEFAULT RULES

#

host all all all scram-sha-256

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

my-pg-cluster-ca 2024-09-06 13:54:17 +0000 UTC 88.21

my-pg-cluster-replication 2024-09-06 13:54:17 +0000 UTC 88.21

my-pg-cluster-server 2024-09-06 13:54:17 +0000 UTC 88.21

Continuous Backup status

Not configured

Physical backups

No running physical backups found

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot Slot Restart LSN Slot WAL Status Slot Safe WAL Size

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ---------------- ---------------- --------------- ------------------

my-pg-cluster-2 0/20000110 0/20000110 0/20000110 0/20000110 00:00:00 00:00:00 00:00:00 streaming async 0 active 0/20000110 reserved NULL

my-pg-cluster-3 0/20000110 0/20000110 0/20000110 0/20000110 00:00:00 00:00:00 00:00:00 streaming async 0 active 0/20000110 reserved NULL

Unmanaged Replication Slot Status

No unmanaged replication slots found

Managed roles status

No roles managed

Tablespaces status

No managed tablespaces

Pod Disruption Budgets status

Name Role Expected Pods Current Healthy Minimum Desired Healthy Disruptions Allowed

---- ---- ------------- --------------- ----------------------- -------------------

my-pg-cluster replica 2 2 1 1

my-pg-cluster-primary primary 1 1 1 0

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

my-pg-cluster-1 37 MB 0/20000110 Primary OK BestEffort 1.23.1 minikube

my-pg-cluster-2 37 MB 0/20000110 Standby (async) OK BestEffort 1.23.1 minikube

my-pg-cluster-3 37 MB 0/20000110 Standby (async) OK BestEffort 1.23.1 minikube

The other important command when it comes to troubleshooting is the “logs” command (the “-f” is for tail):

minicube@micro-minicube:~> kubectl-cnpg logs cluster my-pg-cluster -f

...

{"level":"info","ts":"2024-06-10T08:51:59Z","logger":"wal-archive","msg":"Backup not configured, skip WAL archiving via Barman Cloud","logging_pod":"my-pg-cluster-1","walName":"pg_wal/00000001000000000000000F","currentPrimary":"my-pg-cluster-1","targetPrimary":"my-pg-cluster-1"}

{"level":"info","ts":"2024-06-10T08:52:00Z","logger":"postgres","msg":"record","logging_pod":"my-pg-cluster-1","record":{"log_time":"2024-06-10 08:52:00.121 UTC","process_id":"1289","session_id":"66669223.509","session_line_num":"4","session_start_time":"2024-06-10 05:41:55 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"checkpoint complete: wrote 10 buffers (0.1%); 0 WAL file(s) added, 0 removed, 0 recycled; write=1.005 s, sync=0.006 s, total=1.111 s; sync files=5, longest=0.002 s, average=0.002 s; distance=64233 kB, estimate=64233 kB; lsn=0/20000060, redo lsn=0/1E006030","backend_type":"checkpointer","query_id":"0"}}

{"level":"info","ts":"2024-06-10T08:56:59Z","logger":"wal-archive","msg":"Backup not configured, skip WAL archiving via Barman Cloud","logging_pod":"my-pg-cluster-1","walName":"pg_wal/000000010000000000000010","currentPrimary":"my-pg-cluster-1","targetPrimary":"my-pg-cluster-1"}

This gives you the PostgreSQL as well as the operator logs. Both, the “status” and the “logs” command are essential for troubleshooting.

In the next post we’ll look at scaling the cluster up and down.

L’article CloudNativePG – The kubectl plugin est apparu en premier sur dbi Blog.

How to Fix the etcd Error: “etcdserver: mvcc: database space exceeded” in a Patroni cluster

If you’re encountering the etcd error “etcdserver: mvcc: database space exceeded,” it means your etcd database has exceeded its storage limit. This can occur due to a variety of reasons, such as a large number of revisions or excessive data accumulation. However, there’s no need to panic; this issue can be resolved effectively.

I know that there is already plenty of blogs or posts about etcd, but 99% of them are related to Kubernetes topic where etcd is managed in containers. In my case, etcd cluster is installed on three SLES VMs alongside a Patroni cluster. Using etcd with Patroni enhances the reliability, scalability, and manageability of PostgreSQL clusters by providing a robust distributed coordination mechanism for high availability and configuration management. So dear DBA, I hope that this blog will help you ! Below, I’ll outline the steps to fix this error and prevent this error from happening.

Where did this issue happenThe first time I saw this issue was at a customer. They had a Patroni cluster with 3 nodes, including 2 PostgreSQL instance. They noticed Patroni issue on their monitoring so I was asked to have a look. In the end, the Patroni issue was caused by the etcd database being full. I find the error logs from the etcd service status.

Understanding the ErrorBefore diving into the solution, it’s essential to understand what causes this error. Etcd, a distributed key-value store, utilizes a Multi-Version Concurrency Control (MVCC) model to manage data. When the database space is exceeded, it indicates that there’s too much data stored, potentially leading to performance issues or even service disruptions. By default, the database size is limited to 2Gb, which should be more than enough, but without knowing this limitation, you might encounter the same issue than me one day.

Pause Patroni Cluster ManagementUtilize Patroni’s patronictl command to temporarily suspend cluster management, effectively halting automated failover processes and configuration adjustments while conducting the fix procedure. (https://patroni.readthedocs.io/en/latest/pause.html)

# patronictl pause --wait

'pause' request sent, waiting until it is recognized by all nodes

Success: cluster management is pausedThe first step is to adjust the etcd configuration file to optimize database space usage. Add the following parameters to your etcd configuration file on all nodes of the cluster.

max-wals: 2

auto-compaction-mode: periodic

auto-compaction-retention: "36h"Below, I’ll provide you with some explanation concerning the three parameters we are adding to the configuration file:

- max-wals: 2:

- This parameter specifies the maximum number of write-ahead logs (WALs) that etcd should retain before compacting them. WALs are temporary files used to store recent transactions before they are written to the main etcd database.

- By limiting the number of WALs retained, you control the amount of temporary data stored, which helps in managing disk space usage. Keeping a low number of WALs ensures that disk space is not consumed excessively by temporary transaction logs.

- auto-compaction-mode: periodic:

- This parameter determines the mode of automatic database compaction. When set to “periodic,” etcd automatically compacts its database periodically based on the configured retention period.

- Database compaction removes redundant or obsolete data, reclaiming disk space and preventing the database from growing indefinitely. Periodic compaction ensures that old data is regularly cleaned up, maintaining optimal performance and disk space usage.

- auto-compaction-retention: “36h”:

- This parameter defines the retention period for data before it becomes eligible for automatic compaction. It specifies the duration after which etcd should consider data for compaction.

- In this example, “36h” represents a retention period of 36 hours. Any data older than 36 hours is eligible for compaction during the next periodic compaction cycle.

- Adjusting the retention period allows you to control how long historical data is retained in the etcd database. Shorter retention periods result in more frequent compaction and potentially smaller database sizes, while longer retention periods preserve historical data for a longer duration.

Ensure to restart the etcd service on each node after updating the configuration. You can restart the nodes one by one and monitor the cluster’s status between each restart.

Remove Excessive Data and Defragment the DatabaseExecute various etcd commands to remove excessive data from the etcd database and defragment it. These commands need to be run on each etcd nodes. Complete the whole procedure node by node. In our case, I suggest that we start the process on our third nodes, where we don’t have any PostgreSQL instance running.

# Obtain the current revision

$ rev=$(ETCDCTL_API=3 etcdctl --endpoints=<your-endpoints> endpoint status --write-out="json" | grep -o '"revision":[0-9]*' | grep -o '[0-9].*')

# Compact all old revisions

$ ETCDCTL_API=3 etcdctl compact $rev

# Defragment the excessive space (execute for each etcd node)

$ ETCDCTL_API=3 etcdctl defrag --endpoints=<your-endpoints>

# Disarm alarm

$ ETCDCTL_API=3 etcdctl alarm disarm

# Check the cluster's status again

$ etcdctl endpoint status --cluster -w table

- if the $rev variable contains three times the same number, only use one instance of the number

- The first time you run the compact/defrag commands, you may receive an etcd error. To be on the safe side, run the command on the third node first. In case of an error, you may need to restart the etcd service on the node before continuing. From a blog, this potential error might only concerned etcd version 3.5.x : “There is a known issue that etcd might run into data inconsistency issue if it crashes in the middle of an online defragmentation operation using

etcdctlor clientv3 API. All the existing v3.5 releases are affected, including 3.5.0 ~ 3.5.5. So please useetcdutlto offline perform defragmentation operation, but this requires taking each member offline one at a time. It means that you need to stop each etcd instance firstly, then perform defragmentation usingetcdutl, start the instance at last. Please refer to the issue 1 in public statement.” (https://etcd.io/blog/2023/how_to_debug_large_db_size_issue/#:~:text=Users%20can%20configure%20the%20quota,sufficient%20for%20most%20use%20cases) - Run the defrag command for each node and verify that the DB size has properly reduce each time.

After completing the steps above, ensure there are no more alarms, and the database size has reduced. Monitor the cluster’s performance to confirm that the issue has been resolved successfully.

Resume Patroni Cluster ManagementAfter confirming the successful clean of the alarms, proceed to re-enable cluster management, enabling Patroni to resume its standard operations and exit maintenance mode.

# patronictl resume --wait

'resume' request sent, waiting until it is recognized by all nodes

Success: cluster management is resumedTo conclude, facing the “etcdserver: mvcc: database space exceeded” error can be concerning, but with the right approach, it’s entirely manageable. By updating the etcd configuration and executing appropriate commands to remove excess data and defragment the database, you can optimize your etcd cluster’s performance and ensure smooth operation. Remember to monitor the cluster regularly to catch any potential issues early on. With these steps, you can effectively resolve the etcd database space exceeded error and maintain a healthy etcd environment.

Useful LinksFind more information about etcd database size: How to debug large db size issue?https://etcd.io/blog/2023/how_to_debug_large_db_size_issue/#:~:text=Users%20can%20configure%20the%20quota,sufficient%20for%20most%20use%20cases.

Official etcd operations guide: https://etcd.io/docs/v3.5/op-guide/

L’article How to Fix the etcd Error: “etcdserver: mvcc: database space exceeded” in a Patroni cluster est apparu en premier sur dbi Blog.

Upgrade etcd in a patroni cluster

In a distributed database system like PostgreSQL managed by Patroni, etcd plays a critical role as the distributed key-value store for cluster coordination and configuration. As your system evolves, upgrading etcd becomes necessary to leverage new features, bug fixes, and security enhancements. However, upgrading etcd in a live cluster requires careful planning and execution to ensure data integrity. In this guide, we’ll walk through the process of upgrading etcd from version 3.4.25 to 3.5.12 in a Patroni cluster, based on the detailed notes I took during the upgrade process.

Check the upgrade checklistBefore trying to upgrade, it is important to have a look at all the deprecated features and at the upgrade requirements. In our case, to upgrade to version 3.5.x, it is mandatory that the running cluster is healthy and at least in version 3.4 already.

You can find all this information on the official etcd documentation:

https://etcd.io/docs/v3.3/upgrades/upgrade_3_5/

During the upgrade process, an etcd cluster can accommodate a mix of etcd member versions, functioning based on the protocol of the lowest common version present. The cluster achieves the upgraded status only when all its members are updated to version 3.5. Internally, etcd members negotiate among themselves to establish the overall cluster version, influencing the reported version and the features supported by the cluster.

In most scenarios, transitioning from etcd 3.4 to 3.5 can be accomplished seamlessly through a rolling upgrade process, ensuring zero downtime. Sequentially halt the etcd v3.4 processes, substituting them with etcd v3.5 processes. Upon completion of the migration to v3.5 across all nodes, the enhanced functionalities introduced in v3.5 become accessible to the cluster.

Preparing for the UpgradeBefore starting the upgrade process, it’s essential to make adequate preparations to minimize any potential risks or disruptions. Here are some preliminary steps:

- Check current etcd version

[pgt001] postgres@patroni-1:/postgres/app/postgres> etcdctl version

etcdctl version: 3.4.25

API version: 3.4- Backup etcd data

Use etcdctl to create a snapshot of the etcd data. This ensures that you have a fallback option in case something goes wrong during the upgrade process.

[pgt001] postgres@patroni-1:~> etcdctl snapshot save backup.db

{"level":"info","ts":1710507460.523724,"caller":"snapshot/v3_snapshot.go:119","msg":"created temporary db file","path":"backup.db.part"}

{"level":"info","ts":"2024-03-15T13:57:40.538461+0100","caller":"clientv3/maintenance.go:200","msg":"opened snapshot stream; downloading"}

{"level":"info","ts":1710507460.539052,"caller":"snapshot/v3_snapshot.go:127","msg":"fetching snapshot","endpoint":"127.0.0.1:2379"}

{"level":"info","ts":"2024-03-15T13:57:40.548342+0100","caller":"clientv3/maintenance.go:208","msg":"completed snapshot read; closing"}

{"level":"info","ts":1710507460.5576544,"caller":"snapshot/v3_snapshot.go:142","msg":"fetched snapshot","endpoint":"127.0.0.1:2379","size":"57 kB","took":0.030259485}

{"level":"info","ts":1710507460.5580025,"caller":"snapshot/v3_snapshot.go:152","msg":"saved","path":"backup.db"}

Snapshot saved at backup.db

[pgt001] postgres@patroni-1:~> ll

total 60

-rw------- 1 postgres postgres 57376 Mar 15 13:57 backup.db

[pgt001] postgres@patroni-1:~> etcdctl --write-out=table snapshot status backup.db

+----------+----------+------------+------------+

| HASH | REVISION | TOTAL KEYS | TOTAL SIZE |

+----------+----------+------------+------------+

| 29c96081 | 107 | 117 | 57 kB |

+----------+----------+------------+------------+- Pause Cluster Management

Use Patroni’s patronictl to pause cluster management. This prevents any automated failover or configuration changes during the upgrade process. (https://patroni.readthedocs.io/en/latest/pause.html)

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> patronictl pause --wait

'pause' request sent, waiting until it is recognized by all nodes

Success: cluster management is pausedNow that you’ve prepared your cluster for the upgrade, you can proceed with the actual upgrade steps. All the steps are performed node by node, as mentioned earlier. I will start the upgrade on the third node of my cluster, patroni-3.

- Stop etcd

Stop the etcd service. This ensures that no changes are made to the cluster while the upgrade is in progress.

[pg133] postgres@patroni-3:/postgres/app/postgres/local/dmk/bin> sudo systemctl stop etcd- Extract and Install New etcd Version

Download the new etcd binary and extract it. Then, replace the existing etcd binaries with the new ones.

[pg133] postgres@patroni-3:/postgres/app/postgres/local/dmk/bin> tar axf etcd-v3.5.12-linux-amd64.tar.gz

[pg133] postgres@patroni-3:/postgres/app/postgres/local/dmk/bin> mv etcd-v3.5.12-linux-amd64/etcd* /postgres/app/postgres/local/dmk/bin/

[pg133] postgres@patroni-3:/postgres/app/postgres/local/dmk/bin> etcdctl version

etcdctl version: 3.5.12

API version: 3.5- Start etcd

Start the upgraded etcd service

[pg133] postgres@patroni-3:/postgres/app/postgres/local/dmk/bin> sudo systemctl start etcd

[pg133] postgres@patroni-3:/postgres/app/postgres/local/dmk/bin> sudo systemctl status etcd

● etcd.service - dbi services etcd service

Loaded: loaded (/etc/systemd/system/etcd.service; enabled; preset: enabled)

Active: active (running) since Fri 2024-03-15 14:02:39 CET; 10s ago

Main PID: 1561 (etcd)

Tasks: 9 (limit: 9454)

Memory: 13.1M

CPU: 369ms

CGroup: /system.slice/etcd.service

└─1561 /postgres/app/postgres/local/dmk/bin/etcd --config-file /postgres/app/postgres/local/dmk/etc/etcd.conf

Mar 15 14:02:38 patroni-3 etcd[1561]: {"level":"info","ts":"2024-03-15T14:02:38.292751+0100","caller":"etcdserver/server.go:783","msg":"initialized peer connections; fast-forwarding electi>

Mar 15 14:02:39 patroni-3 etcd[1561]: {"level":"info","ts":"2024-03-15T14:02:39.282054+0100","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"raft.node: f1457fc5460d0329 elected>

Mar 15 14:02:39 patroni-3 etcd[1561]: {"level":"info","ts":"2024-03-15T14:02:39.302529+0100","caller":"etcdserver/server.go:2068","msg":"published local member to cluster through raft","lo>

Mar 15 14:02:39 patroni-3 etcd[1561]: {"level":"info","ts":"2024-03-15T14:02:39.302985+0100","caller":"embed/serve.go:103","msg":"ready to serve client requests"}

Mar 15 14:02:39 patroni-3 etcd[1561]: {"level":"info","ts":"2024-03-15T14:02:39.30307+0100","caller":"embed/serve.go:103","msg":"ready to serve client requests"}

Mar 15 14:02:39 patroni-3 etcd[1561]: {"level":"info","ts":"2024-03-15T14:02:39.302942+0100","caller":"etcdmain/main.go:44","msg":"notifying init daemon"}

Mar 15 14:02:39 patroni-3 etcd[1561]: {"level":"info","ts":"2024-03-15T14:02:39.303671+0100","caller":"etcdmain/main.go:50","msg":"successfully notified init daemon"}

Mar 15 14:02:39 patroni-3 systemd[1]: Started etcd.service - dbi services etcd service.

Mar 15 14:02:39 patroni-3 etcd[1561]: {"level":"info","ts":"2024-03-15T14:02:39.304964+0100","caller":"embed/serve.go:187","msg":"serving client traffic insecurely; this is strongly discou>

Mar 15 14:02:39 patroni-3 etcd[1561]: {"level":"info","ts":"2024-03-15T14:02:39.305719+0100","caller":"embed/serve.go:187","msg":"serving client traffic insecurely; this is strongly discou>

After each etcd upgrade, it’s always nice to verify the health and functionality of the etcd and Patroni cluster. You can notice from the etcdtcl command that the version was upgraded on the third node.

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> etcdctl endpoint status --cluster -w table

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| http://192.168.56.123:2379 | 90015c533cbf2e84 | 3.4.25 | 61 kB | false | false | 15 | 150 | 150 | |

| http://192.168.56.124:2379 | 9fe85e3cebf257e3 | 3.4.25 | 61 kB | false | false | 15 | 150 | 150 | |

| http://192.168.56.125:2379 | f1457fc5460d0329 | 3.5.12 | 61 kB | true | false | 15 | 150 | 150 | |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> patronictl list

+ Cluster: pgt001 (7346518467491201916) ----------+----+-----------+

| Member | Host | Role | State | TL | Lag in MB |

+----------+----------------+---------+-----------+----+-----------+

| pgt001_1 | 192.168.56.123 | Leader | running | 5 | |

| pgt001_2 | 192.168.56.124 | Replica | streaming | 5 | 0 |

+----------+----------------+---------+-----------+----+-----------+

Maintenance mode: on- Upgrading etcd on the second node of the cluster

[pgt001] postgres@patroni-2:/postgres/app/postgres/local/dmk/bin> sudo systemctl stop etcd

[pgt001] postgres@patroni-2:/postgres/app/postgres/local/dmk/bin> tar axf etcd-v3.5.12-linux-amd64.tar.gz

[pgt001] postgres@patroni-2:/postgres/app/postgres/local/dmk/bin> mv etcd-v3.5.12-linux-amd64/etcd* /postgres/app/postgres/local/dmk/bin/

[pgt001] postgres@patroni-2:/postgres/app/postgres/local/dmk/bin> etcdctl version

etcdctl version: 3.5.12

API version: 3.5

[pgt001] postgres@patroni-2:/postgres/app/postgres/local/dmk/bin> sudo systemctl start etcd

[pgt001] postgres@patroni-2:/postgres/app/postgres/local/dmk/bin> sudo systemctl status etcd

● etcd.service - dbi services etcd service

Loaded: loaded (/etc/systemd/system/etcd.service; enabled; preset: enabled)

Active: active (running) since Fri 2024-03-15 14:04:46 CET; 4s ago

Main PID: 1791 (etcd)

Tasks: 7 (limit: 9454)

Memory: 9.7M

CPU: 295ms

CGroup: /system.slice/etcd.service

└─1791 /postgres/app/postgres/local/dmk/bin/etcd --config-file /postgres/app/postgres/local/dmk/etc/etcd.conf

Mar 15 14:04:45 patroni-2 etcd[1791]: {"level":"info","ts":"2024-03-15T14:04:45.690431+0100","caller":"rafthttp/stream.go:274","msg":"established TCP streaming connection with remote peer">

Mar 15 14:04:46 patroni-2 etcd[1791]: {"level":"info","ts":"2024-03-15T14:04:46.739502+0100","logger":"raft","caller":"etcdserver/zap_raft.go:77","msg":"raft.node: 9fe85e3cebf257e3 elected>

Mar 15 14:04:46 patroni-2 etcd[1791]: {"level":"info","ts":"2024-03-15T14:04:46.75204+0100","caller":"etcdserver/server.go:2068","msg":"published local member to cluster through raft","loc>

Mar 15 14:04:46 patroni-2 etcd[1791]: {"level":"info","ts":"2024-03-15T14:04:46.752889+0100","caller":"embed/serve.go:103","msg":"ready to serve client requests"}

Mar 15 14:04:46 patroni-2 etcd[1791]: {"level":"info","ts":"2024-03-15T14:04:46.753543+0100","caller":"etcdmain/main.go:44","msg":"notifying init daemon"}

Mar 15 14:04:46 patroni-2 systemd[1]: Started etcd.service - dbi services etcd service.

Mar 15 14:04:46 patroni-2 etcd[1791]: {"level":"info","ts":"2024-03-15T14:04:46.754213+0100","caller":"embed/serve.go:187","msg":"serving client traffic insecurely; this is strongly discou>

Mar 15 14:04:46 patroni-2 etcd[1791]: {"level":"info","ts":"2024-03-15T14:04:46.757187+0100","caller":"embed/serve.go:103","msg":"ready to serve client requests"}

Mar 15 14:04:46 patroni-2 etcd[1791]: {"level":"info","ts":"2024-03-15T14:04:46.757933+0100","caller":"embed/serve.go:187","msg":"serving client traffic insecurely; this is strongly discou>

Mar 15 14:04:46 patroni-2 etcd[1791]: {"level":"info","ts":"2024-03-15T14:04:46.75994+0100","caller":"etcdmain/main.go:50","msg":"successfully notified init daemon"}

[pgt001] postgres@patroni-2:/postgres/app/postgres/local/dmk/bin> patronictl list

+ Cluster: pgt001 (7346518467491201916) ----------+----+-----------+

| Member | Host | Role | State | TL | Lag in MB |

+----------+----------------+---------+-----------+----+-----------+

| pgt001_1 | 192.168.56.123 | Leader | running | 5 | |

| pgt001_2 | 192.168.56.124 | Replica | streaming | 5 | 0 |

+----------+----------------+---------+-----------+----+-----------+

Maintenance mode: on

- Upgrading etcd on the third node of the cluster

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> sudo systemctl stop etcdNow that we stopped etcd on the server where is our Patroni leader node, let’s take a look at our patroni cluster status.

[pgt001] postgres@patroni-2:/postgres/app/postgres/local/dmk/bin> patronictl list

2024-03-15 14:05:52,778 - ERROR - Failed to get list of machines from http://192.168.56.123:2379/v3beta: MaxRetryError("HTTPConnectionPool(host='192.168.56.123', port=2379): Max retries exceeded with url: /version (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f3584365590>: Failed to establish a new connection: [Errno 111] Connection refused'))")

+ Cluster: pgt001 (7346518467491201916) ----------+----+-----------+

| Member | Host | Role | State | TL | Lag in MB |

+----------+----------------+---------+-----------+----+-----------+

| pgt001_1 | 192.168.56.123 | Leader | running | 5 | |

| pgt001_2 | 192.168.56.124 | Replica | streaming | 5 | 0 |

+----------+----------------+---------+-----------+----+-----------+

Maintenance mode: on

[pgt001] postgres@patroni-2:/postgres/app/postgres/local/dmk/bin> sq

psql (14.7 dbi services build)

Type "help" for help.

postgres=# exitWe can notice that our Patroni cluster is still up and running and that PostgreSQL cluster is still reachable. Also, thanks to patroni maintenance mode, no failover or configuration changes are happening.

Let’s continue with the installation

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> tar axf etcd-v3.5.12-linux- amd64.tar.gz

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> mv etcd-v3.5.12-linux-amd64 /etcd* /postgres/app/postgres/local/dmk/bin/

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> etcdctl version

etcdctl version: 3.5.12

API version: 3.5

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> sudo systemctl start etcd

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> sudo systemctl status etcd

● etcd.service - dbi services etcd service

Loaded: loaded (/etc/systemd/system/etcd.service; enabled; preset: enabled)

Active: active (running) since Fri 2024-03-15 14:07:12 CET; 3s ago

Main PID: 1914 (etcd)

Tasks: 7 (limit: 9454)

Memory: 15.9M

CPU: 160ms

CGroup: /system.slice/etcd.service

└─1914 /postgres/app/postgres/local/dmk/bin/etcd --config-file /postgres/app/postgres/local/dmk/etc/etcd.conf

Mar 15 14:07:12 patroni-1 etcd[1914]: {"level":"info","ts":"2024-03-15T14:07:12.180191+0100","caller":"etcdserver/server.go:2068","msg":"published local member to cluster through raft","lo>

Mar 15 14:07:12 patroni-1 etcd[1914]: {"level":"info","ts":"2024-03-15T14:07:12.180266+0100","caller":"embed/serve.go:103","msg":"ready to serve client requests"}

Mar 15 14:07:12 patroni-1 etcd[1914]: {"level":"info","ts":"2024-03-15T14:07:12.181162+0100","caller":"embed/serve.go:103","msg":"ready to serve client requests"}

Mar 15 14:07:12 patroni-1 etcd[1914]: {"level":"info","ts":"2024-03-15T14:07:12.182377+0100","caller":"embed/serve.go:187","msg":"serving client traffic insecurely; this is strongly discou>

Mar 15 14:07:12 patroni-1 etcd[1914]: {"level":"info","ts":"2024-03-15T14:07:12.182625+0100","caller":"embed/serve.go:187","msg":"serving client traffic insecurely; this is strongly discou>

Mar 15 14:07:12 patroni-1 etcd[1914]: {"level":"info","ts":"2024-03-15T14:07:12.183861+0100","caller":"etcdmain/main.go:44","msg":"notifying init daemon"}

Mar 15 14:07:12 patroni-1 systemd[1]: Started etcd.service - dbi services etcd service.

Mar 15 14:07:12 patroni-1 etcd[1914]: {"level":"info","ts":"2024-03-15T14:07:12.187771+0100","caller":"etcdmain/main.go:50","msg":"successfully notified init daemon"}

Mar 15 14:07:12 patroni-1 etcd[1914]: {"level":"info","ts":"2024-03-15T14:07:12.195369+0100","caller":"membership/cluster.go:576","msg":"updated cluster version","cluster-id":"571a53e78674>

Mar 15 14:07:12 patroni-1 etcd[1914]: {"level":"info","ts":"2024-03-15T14:07:12.195541+0100","caller":"api/capability.go:75","msg":"enabled capabilities for version","cluster-version":"3.5>We now have upgraded etcd on all our nodes and we need to control the status of our clusters.

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> patronictl list

+ Cluster: pgt001 (7346518467491201916) ----------+----+-----------+

| Member | Host | Role | State | TL | Lag in MB |

+----------+----------------+---------+-----------+----+-----------+

| pgt001_1 | 192.168.56.123 | Leader | running | 5 | |

| pgt001_2 | 192.168.56.124 | Replica | streaming | 5 | 0 |

+----------+----------------+---------+-----------+----+-----------+

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> etcdctl endpoint status --cluster -w table

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| http://192.168.56.123:2379 | 90015c533cbf2e84 | 3.5.12 | 61 kB | false | false | 15 | 150 | 150 | |

| http://192.168.56.124:2379 | 9fe85e3cebf257e3 | 3.5.12 | 61 kB | false | false | 15 | 150 | 150 | |

| http://192.168.56.125:2379 | f1457fc5460d0329 | 3.5.12 | 61 kB | true | false | 15 | 150 | 150 | |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> etcdctl version

etcdctl version: 3.5.12

API version: 3.5Once you’ve confirmed that the upgrade was successful, resume cluster management to allow Patroni to resume its normal operations and quit maintenance mode.

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> patronictl resume --wait

'resume' request sent, waiting until it is recognized by all nodes

Success: cluster management is resumed

[pgt001] postgres@patroni-1:/postgres/app/postgres/local/dmk/bin> patronictl list

+ Cluster: pgt001 (7346518467491201916) ----------+----+-----------+

| Member | Host | Role | State | TL | Lag in MB |

+----------+----------------+---------+-----------+----+-----------+

| pgt001_1 | 192.168.56.123 | Leader | running | 5 | |

| pgt001_2 | 192.168.56.124 | Replica | streaming | 5 | 0 |

+----------+----------------+---------+-----------+----+-----------+Upgrading etcd in a Patroni cluster is a critical maintenance task that requires careful planning and execution. By following the steps outlined in this guide and leveraging the detailed notes taken during the upgrade process, you can ensure a smooth and successful upgrade while ensuring data integrity. Remember to always test the upgrade process in a staging environment before performing it in production to mitigate any potential risks.

L’article Upgrade etcd in a patroni cluster est apparu en premier sur dbi Blog.

What DEVs need to hear from a DBA and why SQL and RDBMS still matters…

The Average case

The Average case

As a skilled developer, you are well-versed in the latest trends and fully capable of building an application from scratch. From the frontend to the RESTful API backend, through to the ORM and the database, you have experienced it all.

Having participated in numerous projects and developed substantial code, you have begun to receive feedback from your Sysadmins and users of applications you programmed a few years ago. The application is starting to have performance issues…

-“Simple ! The number of users increased ! The database is now 600GB ! We should provide more resources to the PODs and VMs (my code is good and don’t need rewriting; refactoring was done properly…).”

Makes sense, but the sysadmins tripled the number of CPU and Memory without any benefits whatsoever.

-“Look the database server is too slow the queries are not fast enough !

A DBA should be able to fix that !”

-“We don’t have any, we should call a consultant to make a performance review and help us out of this mess. Customers are still complaining, it is time to invest…”

That’s where a DBA consultant (me) comes along and performs required maintenance and tries to apply standard best practices, tune some parameters here or there and exposes the most intensive queries that need tuning….

Then the DEV Team explains they are using an ORM and can’t “tune Queries” or touch the SQL code because they don’t want to, it would have too many implications on business logic and architecture, and also, they don’t know SQL all that much; it is an old language they used back in their early days as developer.

1. Why SQL and RDBMS (still)?

1. Why SQL and RDBMS (still)?

As a developer don’t overlook SQL and RDBMS like PostgreSQL. It is still the best way to store and access data when relation between data is important and when that relation can be defined beforehand and is stable (which is usually the case in businesses).

In the following example there are several benefits/reasons for using a RDBMS :

- Data integrity: Enforced by foreign keys and other constraints the table design ensures that the data remains accurate, and consistent, preventing issues like orphaned records.

In this case, an order cannot exist without a customer, and a line item cannot exist without an associated product and order. - Complex Queries: RDBMS are made for JOINs between tables. All the architecture of an RDBMS is helping providing facilities to retrieve and store data efficiently.

- Transaction support: If your requirements are like in this example, an order with multiple steps in it (updating inventory, creating an order record…) must complete successfully together or not at all.

SELECT o.order_id, c.name, p.name, od.quantity, p.price, (od.quantity * p.price) AS total_cost

FROM Orders o

JOIN Customers c ON o.customer_id = c.customer_id

JOIN Order_Details od ON o.order_id = od.order_id

JOIN Products p ON od.product_id = p.product_id

WHERE c.name = 'Bob Kowalski'

ORDER BY o.order_date DESC

LIMIT 100;

NoSQL DB like MongoDB or Cassandra are designed for scalability and flexibility in storing unstructured data, complex joins and transactions are not supported in the same way. They are more suitable if your data structure changes frequently and the application demands high write throughput and horizontal scalability.

In our example an RDBMS like MySQL, MariaDB or PostgreSQL is the best choice to store the “statefullness” of your application but you could use NoSQL DBMS like Redis to cache some data and help not putting too much pressure on the RDBMS by making less calls to it. No one needs to retrieve the same data 50000 times per minute… Use the cache Luke… use the cache…

It would be silly to tell you “Don’t use NoSQL, RDBMS is king !”.

Use them both and understand their limitations.

DEVs love their NoSQL because having a schema-less architecture helps them scale easily and achieve better integration with CI/CD processes, which is traditionally difficult with RDBMS, bonus point for not having to talk to a DBA (which I understand, I talk to myself already enough:)…

In this instance, and perhaps in life overall, one should consider bypassing standardized models and rules established by predecessors only if you comprehend the implications and the original reasons for their existence.

Yes and no. ORMs are one of the good things that happened to Developers and DBAs. It helps creating better code in most of the case and they become quite tunable nowadays.

So please keep your ORM, you need it today since it creates a level of abstraction that is helpful for simple queries and scalable logic and gets you faster and closer to delivery.

The thing is that you have to understand their limitations. And as DBA I am fine with using 100% of ORMs SQL, up until you have a performance issue.

If for some reason your application gets some success and is used enough so that you are being pushed by the business to do better, ask the DBA to provide you the top 10 queries of the last weeks and understand how you can tune those and maybe not use the ORM in some cases.

When the ORM is producing suboptimal queries (queries not performing well enough for business), it might be for several reasons :

- Abstraction: To produce queries, an ORM has to generalize them is such a way that it can cause performance issues. Because the ORM can’t think of all the cases and doesn’t know your data.

- N+1 Problem: Commonly known, this issue is generating more roundtrip calls than it’s advisable to the RDBMS and has been well documented in most documentation since the early 2000s. In general, just think about the data you need and try to understand if you can solve it by creating a query with appropriate JOINs and fetch the required data in one go.

ORMs (Hibernate or Entity for example) allow specifying a batch size for fetching related entities. This means instead of one query per related entity, the ORM will group several entities into fewer queries. Some other ways on the RDBMS side can mitigate those aspects as well like proper indexing, views, materialized views,… - Complex joins: What? an RDBMS can’t handle a lot of JOINs ?! It depends on what you mean by a lot, but generally, RDBMS like SQL Server are having a hard time with more than 6 or 7 JOINs, PostgreSQL you could go a bit further and use GEQO algorithm at the cost of planning time of your execution plan, but overall, an optimizer can’t produce a proper query plan when the cardinality tends towards infinity… which is the case when your ORM queries are generating queries with 86 JOINs !

Note: Understand that it is not just about the number of JOINs. Schema Design indexes and the optimizer capabilities are critical aspects of performance levels, most of the time people are hitting limitations in a RDBMS because they don’t recognize their existence.

If you want to get more info and best practices about ORM I suggest reading this : Hibernate Best Practices (thorben-janssen.com)

4. Performance optimization paths for RDBMS:In addition to what has been said already, you can also optimize your instance to work better.

Earlier, I discussed the limitations on the number of JOINs an optimizer can handle. It’s crucial to recognize that an optimizer’s capabilities are affected by schema design, indexes, and the queries themselves! Like said often by Brent Ozar, you have 3 buttons that you can play with to get better performance : TABLE design, QUERY design and Resources.

People often play with the third one because it is easy to request for more CPU and Memory… cloud providers make you pay for that, it is less the case nowadays though.

So for me you can request additional training for your team, numerous companies offer performance training dedicated for Oracle, SQL Server, PostgreSQL, MariaDB, MySQL,…. and DBI services is one of them.

But you could also first, take leverage of modern monitoring and tools like Query Store on SQL Server or PGANALYZE on PostgreSQL to understand better where your performance bottleneck is.

In most cases, it is easy to query for the top 20 resource-intensive queries, usually in those you will have 3 or 4 that are consuming more resources by 10x. Try to understand why that is and get specialized help if you can’t.

- It is still a matter of using the proper tool for the proper job. Building an architecture based on ORM is a good practice and even if you need to store JSON in the database, I am still up for that ( PostgreSQL supports it in the best way possible ).

- Be prepared that if along the way you need to get more performance at scale, you’ll need to be prepared for a hybrid approach. Using ORM for simple CRUD and raw SQL for the relevant queries. ORM do support writing Native SQL Queries, don’t be afraid to use it.

- In addition use cache capabilities when you can.

- Consult with your Sysadmins and DBAs, they know stuff on your app you want to hear. Trust me on that, they want to help (most of the time:).

Often different teams don’t have the same monitoring tools and don’t look at the same metrics. It is important to understand why. - Be sure to update your knowledge. Often enough I still see DEVs that still are having hard time understanding key concepts or evolution of the best practices… (stored procedures, anti or semi-joins, ….etc).

I do understand that most DEVs are not building a new app every morning from scratch most of them inherit code and logic from old applications build some time ago, architectural decisions are not so often in their hands. Even then, I think we are at a cornerstone of IT evolution, and the next years will be filled with opportunities and new tech, but for the past years most of the projects I have seen failed performance wise, were due to miss communication and over simplifications of complex systems. Platform engineering should solve that and put away the overhead of managing all systems without knowing them all…

L’article What DEVs need to hear from a DBA and why SQL and RDBMS still matters… est apparu en premier sur dbi Blog.

Alfresco – Mass removal/cleanup of documents

At a customer, I recently had a case where a mass-import job was executed on an interface that, in the background, uses Alfresco for document and metadata storage. From the point of view of the interface team, there was no problem as documents were properly being created in Alfresco (although performance wasn’t exceptional). However, after some time, our monitoring started sending us alerts that Solr indexing nearly stopped / was very slow. I might talk about the Solr part in a future blog but what happened is that the interface was configured to import documents into Alfresco in a way that caused too many documents in a single folder.

Too many documents in the same folder of AlfrescoThe interface was trying to import documents in the folder “YYYY/MM/DD/HH” (YYYY being the year, MM the month, DD the day and HH the hour). This might be fine for Business-As-Usual (BAU), when the load isn’t too high, but when mass-importing documents, that meant several thousand documents per folder (5’000, 10’000, 20’000, …), the limit being what Alfresco can ingest in an hour or what the interface manages to send. As you probably know, Alfresco definitively doesn’t like folders with much more than a thousand nodes inside (in particular because of associations and indexing design)… When I saw that, I asked the interface team to stop the import job, but unfortunately, it wasn’t stopped right away and almost 190 000 documents were already imported into Alfresco.

Alfresco APIs for the win?You cannot really let Alfresco in this state since Solr would heavily be impacted by this kind of situation and any change to a document in such folder could result in heavy load. Therefore, from my point of view, the best is to remove the documents and execute a new/correct import with a better distribution of documents per folder.

A first solution could be to restore the DB to a point in time before the activity started, but that means a downtime and anything else that happened in the meantime would be lost. A second option would be to find all the documents imported and remove them through API. As you might know, Share UI will not really be useful in this case since Share will either crash or just take way too long to open the folder, so don’t even try… And even if it is able to somehow open the folder containing XX’XXX nodes, you probably shouldn’t try to delete it because it will take forever, and you will not be able to know what’s the status of this process that runs in the background. Therefore, from my point of view, the only reasonable solution is through API.

Finding documents to deleteAs mentioned, Solr indexing was nearly dead, so I couldn’t rely on it to find what was imported recently. Using REST-API could be possible but there are some limitations when working with huge set of results. In this case, I decided to go with a simple DB query (if you are interested in useful Alfresco DB queries), listing all documents created since the start of the mass-import by the interface user:

SQL> SELECT n.id AS "Node ID",

n.store_id AS "Store ID",

n.uuid AS "Document ID (UUID)",

n.audit_creator AS "Creator",

n.audit_created AS "Creation Date",

n.audit_modifier AS "Modifier",

n.audit_modified AS "Modification Date",

n.type_qname_id

FROM alfresco.alf_node n,

alfresco.alf_node_properties p

WHERE n.id=p.node_id

AND p.qname_id=(SELECT id FROM alf_qname WHERE local_name='content')

AND n.audit_created>='2023-11-23T19:00:00Z'

AND n.audit_creator='itf_user'

AND n.audit_created is not null;

In case the interface isn’t using a dedicated user for the mass-import process, it might be a bit more difficult to find the correct list of documents to be removed, as you would need to take care not to remove the BAU documents… Maybe using a recursive query based on the folder on which the documents were imported or some custom type/metadata or similar. The result of the above query was put in a text file for the processing:

alfresco@acs01:~$ cat alfresco_documents.txt

Node ID Store ID Document ID (UUID) Creator Creation Date Modifier Modification Date TYPE_QNAME_ID

--------- -------- ------------------------------------ --------- ------------------------- --------- ------------------------- -------------

156491155 6 0f16ef7a-4cf1-4304-b578-71480570c070 itf_user 2023-11-23T19:01:02.511Z itf_user 2023-11-23T19:01:03.128Z 265

156491158 4 2f65420a-1105-4306-9733-210501ae7efb itf_user 2023-11-23T19:01:03.198Z itf_user 2023-11-23T19:01:03.198Z 265

156491164 6 a208d56f-df1a-4f2f-bc73-6ab39214b824 itf_user 2023-11-23T19:01:03.795Z itf_user 2023-11-23T19:01:03.795Z 265

156491166 4 908d385f-d6bb-4b94-ba5c-6d6942bb75c3 itf_user 2023-11-23T19:01:03.918Z itf_user 2023-11-23T19:01:03.918Z 265

...

159472069 6 cabf7343-35c4-4e8b-8a36-0fa0805b367f itf_user 2023-11-24T07:50:20.355Z itf_user 2023-11-24T07:50:20.355Z 265

159472079 4 1bcc7301-97ab-4ddd-9561-0ecab8d09efb itf_user 2023-11-24T07:50:20.522Z itf_user 2023-11-24T07:50:20.522Z 265

159472098 6 19d1869c-83d9-449a-8417-b460ccec1d60 itf_user 2023-11-24T07:50:20.929Z itf_user 2023-11-24T07:50:20.929Z 265

159472107 4 bcd0f8a2-68b3-4cc9-b0bd-2af24dc4ff43 itf_user 2023-11-24T07:50:21.074Z itf_user 2023-11-24T07:50:21.074Z 265

159472121 6 74bbe0c3-2437-4d16-bfbc-97bfa5a8d4e0 itf_user 2023-11-24T07:50:21.365Z itf_user 2023-11-24T07:50:21.365Z 265

159472130 4 f984679f-378b-4540-853c-c36f13472fac itf_user 2023-11-24T07:50:21.511Z itf_user 2023-11-24T07:50:21.511Z 265

159472144 6 579a2609-f5be-47e4-89c8-daaa983a314e itf_user 2023-11-24T07:50:21.788Z itf_user 2023-11-24T07:50:21.788Z 265

159472153 4 7f408815-79e1-462a-aa07-182ee38340a3 itf_user 2023-11-24T07:50:21.941Z itf_user 2023-11-24T07:50:21.941Z 265

379100 rows selected.

alfresco@acs01:~$

The above Store ID of ‘6’ is for the ‘workspace://SpacesStore‘ (live document store) and ‘4’ is for the ‘workspace://version2Store‘ (version store):

SQL> SELECT id, protocol, identifier FROM alf_store;

ID PROTOCOL IDENTIFIER

--- ---------- ----------

1 user alfrescoUserStore

2 system system

3 workspace lightWeightVersionStore

4 workspace version2Store

5 archive SpacesStore

6 workspace SpacesStore

Looking at the number of rows for each Store ID gives the exact same number and confirms there are no deleted documents yet:

alfresco@acs01:~$ grep " 4 " alfresco_documents.txt | wc -l

189550

alfresco@acs01:~$

alfresco@acs01:~$ grep " 5 " alfresco_documents.txt | wc -l

0

alfresco@acs01:~$

alfresco@acs01:~$ grep " 6 " alfresco_documents.txt | wc -l

189550

alfresco@acs01:~$

Therefore, there is around 190k docs to remove in total, which is roughly the same number seen in the filesystem. The Alfresco ContentStore has a little bit more obviously since it also contains the BAU documents.

REST-API environment preparationNow that the list is complete, the next step is to extract the IDs of the documents, so that we can use these in REST-API calls. The IDs are simply the third column from the file (Document ID (UUID)):

alfresco@acs01:~$ grep " 6 " alfresco_documents.txt | awk '{print $3}' > input_file_6_id.txt

alfresco@acs01:~$

alfresco@acs01:~$ wc -l alfresco_documents.txt input_file_6_id.txt

379104 alfresco_documents.txt

189550 input_file_6_id.txt

568654 total

alfresco@acs01:~$

Now, to be able to execute REST-API calls, we will also need to define the username/password as well as the URL to be used. I executed the REST-API calls from the Alfresco server itself, so I didn’t really need to think too much about security, and I just used a BASIC authorization method using localhost and HTTPS. If you are executing that remotely, you might want to use tickets instead (and obviously keep the HTTPS protocol). To prepare for the removal, I defined the needed environment variables as follow:

alfresco@acs01:~$ alf_user=admin

alfresco@acs01:~$ read -s -p "Enter ${alf_user} password: " alf_passwd

Enter admin password:

alfresco@acs01:~$

alfresco@acs01:~$ auth=$(echo -n "${alf_user}:${alf_passwd}" | base64)

alfresco@acs01:~$

alfresco@acs01:~$ alf_base_url="https://localhost:8443/alfresco"

alfresco@acs01:~$ alf_node_url="${alf_base_url}/api/-default-/public/alfresco/versions/1/nodes"

alfresco@acs01:~$

alfresco@acs01:~$ input_file="$HOME/input_file_6_id.txt"

alfresco@acs01:~$ output_file="$HOME/output_file_6.txt"

alfresco@acs01:~$

With the above, we have our authorization string (base64 encoding of ‘username:password‘) as well as the Alfresco API URL. In case you wonder, you can find the definition of the REST-APIs in the Alfresco API Explorer. I also defined the input file, which contains all document IDs and an output file, which will contain the list of all documents processed, with the outcome of the command, to be able to check for any issues and follow the progress.

Deleting documents with REST-APIThe last step is now to create a small command/script that will execute the deletion of the documents in REST-API. Things to note here is that I’m using ‘permanent=true‘ so that the documents will not end-up in the trashcan but will be completely and permanently deleted. Therefore, you need to make sure the list of documents is correct! You can obviously set that parameter to false if you really want to, but please be aware that it will impact the performance quite a bit… Otherwise the command is fairly simple, it loops on the input file, execute the deletion query, get its output and log it:

alfresco@acs01:~$ while read -u 3 line; do

out=$(curl -k -s -X DELETE "${alf_node_url}/${line}?permanent=true" -H "accept: application/json" -H "Authorization: Basic ${auth}" | sed 's/.*\(statusCode":[0-9]*\),.*/\1/')

echo "${line} -- ${out}" >> "${output_file}"

done 3< "${input_file}"

The above is the simplest way/form of removal, with a single thread executed on a single server. You can obviously do multi-threaded deletions by splitting the input file into several and triggering commands in parallel, either on the same host or even on other hosts (if you have an Alfresco Cluster). In this example, I was able to get a consistent throughput of ~3130 documents deleted every 5 minutes, which means ~10.4 documents deleted per second. Again, that was on a single server with a single thread:

alfresco@acs01:~$ while true; do

echo "$(date) -- $(wc -l output_file_6.txt)"

sleep 300

done

Fri Nov 24 09:57:38 CET 2023 -- 810 output_file_6.txt

...

Fri Nov 24 10:26:55 CET 2023 -- 18920 output_file_6.txt

Fri Nov 24 10:31:55 CET 2023 -- 22042 output_file_6.txt

Fri Nov 24 10:36:55 CET 2023 -- 25180 output_file_6.txt

Fri Nov 24 10:41:55 CET 2023 -- 28290 output_file_6.txt

...

Since the cURL output (‘statusCode‘) is also recorded in the log file, I was able to confirm that 100% of the queries were successfully executed and all my documents were permanently deleted. With multi-threading and offloading to other members of the Cluster, it would have been possible to increase that by a lot (x5? x10? x20?) but that wasn’t needed in this case since the interface job needed to be updated before a new import could be triggered.

L’article Alfresco – Mass removal/cleanup of documents est apparu en premier sur dbi Blog.

Add authentication in a Feathers.js REST API

Following on from my previous articles: Create REST API from your database in minute with Feathers.js, and Add a UI to explore the Feathers.js API, today I want to add authentication in my Feathers.js REST API. Creation, update and delete operations will be authenticated, while read will remain public.

First step: add authentication to my applicationI’m using the code from my previous articles, and I add the authentication to my Feathers.js API. I use the CLI, it’s quick and easy:

npx feathers generate authenticationI want a simple user + password authentication. To achieve this, I’ve configured my authentication service as follows:

? Which authentication methods do you want to use? Email + Password

? What is your authentication service name? user

? What path should the service be registered on? users

? What database is the service using? SQL

? Which schema definition format do you want to use? TypeBoxNow I have an authentication method available in my application. If you look at the code, a new service users has been generated. It’s used to be retrieved users from the database. I won’t explain here how to create a user, but you can refer to the documentation.

Second step: authenticate the serviceAdditionally, I’m now going to define which method is authenticated in my service. To do this, I open the workshop.ts file. The important part of the code for this configuration is this:

// Initialize hooks

app.service(workshopPath).hooks({

around: {

all: [

schemaHooks.resolveExternal(workshopExternalResolver),

schemaHooks.resolveResult(workshopResolver)

]

},

before: {

all: [

schemaHooks.validateQuery(workshopQueryValidator),

schemaHooks.resolveQuery(workshopQueryResolver)

],

find: [],

get: [],

create: [

schemaHooks.validateData(workshopDataValidator),

schemaHooks.resolveData(workshopDataResolver)

],

patch: [

schemaHooks.validateData(workshopPatchValidator),

schemaHooks.resolveData(workshopPatchResolver)

],

remove: []

},

after: {

all: []

},

error: {

all: []

}

})I add the “authenticate(‘jwt’)” function in create, patch and remove into the before block. This function check the credentials before the call of the main function.

before: {

...

create: [

schemaHooks.validateData(workshopDataValidator),

schemaHooks.resolveData(workshopDataResolver),

authenticate('jwt')

],

patch: [

schemaHooks.validateData(workshopPatchValidator),

schemaHooks.resolveData(workshopPatchResolver),

authenticate('jwt')

],

remove: [authenticate('jwt')]

},The basic authentication (user + password from the db) is managed by Feathers.js, which generates a JWT token on login.

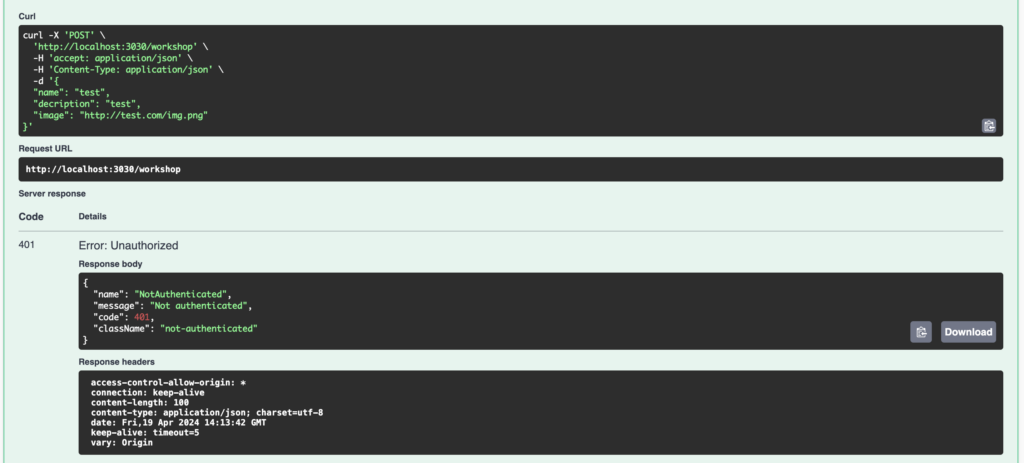

Verify service authenticationFinally, I test the authentication of my service. To do this, I use the Swagger interface configured earlier. The POST method for creating a new record is now authenticated:

Authentication works correctly, but as I don’t pass a JWT token, I get the error 401 Unauthorized.

ConclusionAdding authentication to a Feathers.js REST API is as easy as generating the service itself.

Feathers.js offers different authentication strategies, such as Local (user + password), JWT or oAuth. But if that’s not enough, you can also create a custom strategy.